Amazon Web Services allow you to do everything, so it is hard to figure out how to do anything. I have a node/angular/mongo stack running on Heroku, and want to use Amazon to store images. I was delighted to stumble across Direct to S3 Uploads in Node.js, written by Will Webberly for the Heroku Dev Center blog. Following his example, I was able to upload images directly from the browser to S3, saving my app server a whole lot of work.

In the example, when a user selects a file for upload, the browser asks the node server for a temporary signed request. The server replies with a signed URL, and the browser uses that URL to send the file directly to Amazon. Ta-da! Almost. There are a few catches. One is that the tutorial assumes you already know how to set up an S3 bucket and an IAM user with the correct access controls.
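To make the signing step less magical, here is a minimal sketch of what a `/sign_s3` route could compute on the server. It uses only Node's built-in `crypto` module to build an AWS Signature Version 4 presigned PUT URL. This is not Webberly's code, and `presignPut` and its parameter names are hypothetical; in a real app you would more likely let the official AWS SDK generate the URL. The credentials would come from Heroku config vars (for example `process.env.AWS_ACCESS_KEY_ID`, though that variable name is also an assumption).

```javascript
const crypto = require('crypto');

// SigV4 wants RFC 3986 encoding; encodeURIComponent leaves !'()* alone,
// so those characters are escaped by hand.
function rfc3986(str) {
  return encodeURIComponent(str).replace(/[!'()*]/g,
    (c) => '%' + c.charCodeAt(0).toString(16).toUpperCase());
}

function hmac(key, data) {
  return crypto.createHmac('sha256', key).update(data, 'utf8').digest();
}

function sha256Hex(data) {
  return crypto.createHash('sha256').update(data, 'utf8').digest('hex');
}

// Hypothetical helper: returns a URL the browser can PUT the file to.
function presignPut({ bucket, key, region, accessKeyId, secretAccessKey,
                      expiresIn = 60, now = new Date() }) {
  const host = `${bucket}.s3.${region}.amazonaws.com`;
  // "2013-05-24T00:00:00.000Z" -> "20130524T000000Z"
  const amzDate = now.toISOString().replace(/[-:]/g, '').replace(/\.\d{3}/, '');
  const dateStamp = amzDate.slice(0, 8);
  const scope = `${dateStamp}/${region}/s3/aws4_request`;

  // Encode each path segment once, keeping the slashes between them.
  const canonicalUri = '/' + key.split('/').map(rfc3986).join('/');

  const params = {
    'X-Amz-Algorithm': 'AWS4-HMAC-SHA256',
    'X-Amz-Credential': `${accessKeyId}/${scope}`,
    'X-Amz-Date': amzDate,
    'X-Amz-Expires': String(expiresIn), // seconds the URL stays valid
    'X-Amz-SignedHeaders': 'host',
  };
  // Query parameters must be sorted by name in the canonical request.
  const canonicalQuery = Object.keys(params).sort()
    .map((k) => `${rfc3986(k)}=${rfc3986(params[k])}`)
    .join('&');

  const canonicalRequest = [
    'PUT',
    canonicalUri,
    canonicalQuery,
    `host:${host}\n`,   // canonical headers block ends with its own newline
    'host',             // signed header names
    'UNSIGNED-PAYLOAD', // the file body is not known when signing
  ].join('\n');

  const stringToSign = ['AWS4-HMAC-SHA256', amzDate, scope,
    sha256Hex(canonicalRequest)].join('\n');

  // Derive the signing key: date -> region -> service -> "aws4_request".
  let signingKey = hmac('AWS4' + secretAccessKey, dateStamp);
  signingKey = hmac(signingKey, region);
  signingKey = hmac(signingKey, 's3');
  signingKey = hmac(signingKey, 'aws4_request');
  const signature = crypto.createHmac('sha256', signingKey)
    .update(stringToSign, 'utf8').digest('hex');

  return `https://${host}${canonicalUri}?${canonicalQuery}&X-Amz-Signature=${signature}`;
}
```

An Express route could call `presignPut` with the bucket, region, and object name from the query string and send the result back as JSON; the exact response shape depends on what your client-side upload library expects. Note that this sketch signs only the `host` header, so it neither sets an ACL nor pins the `Content-Type`; whether uploaded images are publicly readable depends on your bucket settings.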

Setting the S3 stage

When S3 receives a request, it must verify that the requester has the proper permissions, both at the account level and at the bucket level. With a seemingly infinite number of services available through Amazon, I found that I had to piece together information from a few different sources.

-

Create a bucket

- See this Amazon tutorial for how to create a bucket.

-

Create a user

- See this Amazon tutorial for creating an IAM User.

- Be sure to record the generated

Access Key IDandSecret Access Key. They will act as user name and password for accessing your bucket.

-

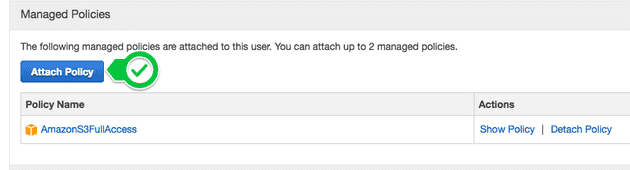

Grant the IAM User access to S3

-

Grant the IAM User access to the bucket

- Use this Amazon tool to help you generate an Access Control Policy. The key pieces you need for the policy generator are the AWS Principle (the user you created), which you enter in the format

arn:aws:iam::<your_account_number>:<IAM_user_name>, and the Resource (the bucket you created), which you enter in the formatarn:aws:s3:::<your_bucket_name>/*.


```json
{
  "Version": "2012-10-17",
  "Id": "Policy1234567890123",
  "Statement": [{
    "Sid": "Stmt123456789",
    "Effect": "Allow",
    "Principal": {
      "AWS": "arn:aws:iam::111122223333:user/specialUser"
    },
    "Action": [
      "s3:PutObject",
      "s3:DeleteObject",
      "s3:GetObject"
    ],
    "Resource": "arn:aws:s3:::examplebucket/*"
  }]
}
```

In case you get lost, here is one more invaluable Amazon tutorial.
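If you would rather generate these documents than click through the generator, a small helper can build both policies from three values. This is a hypothetical sketch: `buildPolicies` and its argument names are my own, and the account ID, user name, and bucket are the placeholder values from the example policy. It mirrors the two grant steps: an identity policy attached to the IAM user, which has no `Principal` element, and a bucket policy, which names the user as its `Principal`.

```javascript
// IAM user ARNs carry the "user/" resource-type prefix.
function iamUserArn(accountId, userName) {
  return `arn:aws:iam::${accountId}:user/${userName}`;
}

// S3 ARNs leave the region and account fields empty; "/*" covers every object.
function bucketObjectsArn(bucket) {
  return `arn:aws:s3:::${bucket}/*`;
}

const OBJECT_ACTIONS = ['s3:PutObject', 's3:DeleteObject', 's3:GetObject'];

// Hypothetical helper returning both policy documents as plain objects.
function buildPolicies({ accountId, userName, bucket }) {
  const resource = bucketObjectsArn(bucket);
  return {
    // Identity-based policy: attached to the user, so no Principal element.
    userPolicy: {
      Version: '2012-10-17',
      Statement: [{ Effect: 'Allow', Action: [...OBJECT_ACTIONS], Resource: resource }],
    },
    // Resource-based policy: attached to the bucket, names the user as Principal.
    bucketPolicy: {
      Version: '2012-10-17',
      Statement: [{
        Effect: 'Allow',
        Principal: { AWS: iamUserArn(accountId, userName) },
        Action: [...OBJECT_ACTIONS],
        Resource: resource,
      }],
    },
  };
}

const policies = buildPolicies({
  accountId: '111122223333',
  userName: 'specialUser',
  bucket: 'examplebucket',
});
console.log(JSON.stringify(policies.bucketPolicy, null, 2));
```

The printed JSON can be pasted into the bucket's policy editor in the S3 console; the `Sid` and `Id` fields from the generator are optional and omitted here.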

Angular client side

Using an angular app on the client side is also a slight deviation from the Webberly tutorial. Angular does not support an ng-change binding on file input elements, but there is a workaround:

```html
<input type="file" class="form-control btn" id="image" onchange="angular.element(this).scope().s3Upload(this)">
```

Additionally, instead of adding the upload function as plain JavaScript in your HTML template, add the s3Upload function to the $scope in your controller.

```javascript
$scope.s3Upload = function(){
  // Elements used to report progress and show the result
  var status_elem = document.getElementById("status");
  var url_elem = document.getElementById("image_url");
  var preview_elem = document.getElementById("preview");

  var s3upload = new S3Upload({
    s3_object_name: showTitleUrl(), // upload object with a custom name
    file_dom_selector: 'image',     // id of the file <input>
    s3_sign_put_url: '/sign_s3',    // server route that returns the signed request
    onProgress: function(percent, message) {
      status_elem.innerHTML = 'Upload progress: ' + percent + '% ' + message;
    },
    onFinishS3Put: function(public_url) {
      status_elem.innerHTML = 'Upload completed. Uploaded to: ' + public_url;
      url_elem.value = public_url; // put the URL into the image_url field
      preview_elem.innerHTML = '<img src="' + public_url + '" style="width:300px;" />';
    },
    onError: function(status) {
      status_elem.innerHTML = 'Upload error: ' + status;
    }
  });
};
```

Note the s3_object_name option. A peek into the S3Upload source shows that you can set a custom file name by passing an s3_object_name option; otherwise, every object will be named default_name.
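Because the object name becomes part of the image URL, it may be worth being stricter than the showTitleUrl helper (shown in the excerpt below), which only replaces spaces. Here is a hypothetical variant, `makeObjectName`, that takes the title and timestamp as arguments so it is easy to test, keeps only letters, digits, `_`, `.` and `-`, and puts an underscore between the timestamp and the title. The character set and the 100-character cap are my own choices, not S3 requirements.

```javascript
// Hypothetical variant of showTitleUrl: "timestamp_title", with the title
// reduced to characters that are safe in both an S3 key and a URL.
function makeObjectName(title, timestamp = Date.now()) {
  const slug = String(title)
    .trim()
    .replace(/\s+/g, '_')            // spaces (and runs of them) become underscores
    .replace(/[^A-Za-z0-9_.-]/g, '') // drop everything else: / ? # : accents ...
    .slice(0, 100);                  // keep keys reasonably short
  // Fall back to a fixed word if nothing usable is left of the title.
  return `${timestamp}_${slug || 'untitled'}`;
}

console.log(makeObjectName('My Show: Live?', 1400000000000));
```

In the controller you would then pass `s3_object_name: makeObjectName($scope.show.title)` instead of calling showTitleUrl.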

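Here is the relevant excerpt, along with the helper that builds the name:

```javascript
$scope.s3Upload = function(){
  ...
  var s3upload = new S3Upload({
    s3_object_name: showTitleUrl(), // upload object with a custom name
    file_dom_selector: 'image',
    s3_sign_put_url: '/sign_s3',
    ...
  });
};

// Build the object name from the current timestamp and the show title,
// e.g. "1400000000000My_Show" for "My Show" (no separator between the parts).
function showTitleUrl() {
  var title = $scope.show.title.split(' ').join('_');
  var dateId = Date.now().toString();
  return dateId + title;
}
```

Add image to your schema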

The last stumbling block I encountered was saving the image URL into mongo. The image would upload and appear just fine, but the URL wouldn't persist as a property of the object. I had forgotten to add the image field to my mongoose schema; the schema acts a bit like a whitelist for the attributes mongoose will save into mongo. Adding imageUrl to the schema solved all my woes.
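The whitelist idea is easy to see in miniature. The toy function below imitates mongoose's default strict mode, in which values for paths that are not declared in the schema are not saved to the database. It is an illustration only, not mongoose's implementation; in the real schema the fix is a single line such as `imageUrl: String` inside `new mongoose.Schema({ ... })`. The field values and URL here are made up.

```javascript
// Toy imitation of mongoose's default strict mode: only fields declared in
// the schema survive. Not mongoose's real implementation.
function applyStrictSchema(schemaFields, doc) {
  const kept = {};
  for (const field of Object.keys(schemaFields)) {
    if (Object.prototype.hasOwnProperty.call(doc, field)) {
      kept[field] = doc[field];
    }
  }
  return kept;
}

// Hypothetical form data coming back after the upload step.
const incoming = {
  title: 'My Show',
  imageUrl: 'https://examplebucket.s3.amazonaws.com/1400000000000_My_Show',
};

// Before the fix: imageUrl is not declared, so it is silently dropped.
console.log(applyStrictSchema({ title: String }, incoming));

// After the fix: the equivalent of adding `imageUrl: String` to the schema.
console.log(applyStrictSchema({ title: String, imageUrl: String }, incoming));
```

The silent part is what makes this bug confusing: nothing errors, the upload itself succeeds, and the field simply never shows up in mongo.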

If you are trying to accomplish something similar, Heroku has articles on uploading directly to S3 using many different stacks.